Are we enslaving ourselves to a God of our own creation?

We are enslaving ourselves to our own God

It’s been a year or so since I wrote my last post about Artificial Intelligence and its branches, such as Machine Learning and Deep Learning. As a technology enthusiast I am fascinated and intrigued by the idea of a virtual assistant like Cortana from Halo or Mass Effect’s VI (Virtual Intelligence). But as things get more and more advanced, it looks like we are enslaving ourselves to a technological God of our own making.

I am not exactly a religious type of person and I am not going to talk about whether it is right or wrong to have faith in Someone above us (which - for the record - I do), but I think there comes a point where our humanity is at stake. Let me explain my point.

Technology is a tool.

It’s a hammer: you can use it for good or bad. You can build a shelter, or you can kill someone with it. That doesn’t make the hammer immoral or bad; it’s how you use it that determines the outcome.

AI in its current state of development is still a tool. It is not capable of determining whether its behavior is good or bad; it is an incredibly advanced and well-performing statistical tool. A spreadsheet on steroids, if you prefer.

With few exceptions, we are starting to use this hammer to repeatedly hit our own fingers, rather than to build something useful for a greater good. A humanity-oriented good.

How is it that a hammer is enslaving us?

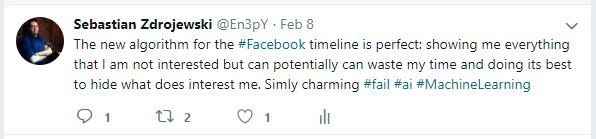

One of the most important advancements in the research, development and application of machine learning is turning us into products on a shelf. I am talking about the applications that profile us and display ads as we visit websites or, my favorite one, the timelines on social media sites.

Massive amounts of information from different sources are gathered and processed in near real time to show advertisements for things that we might be interested in. Data is collected while we visit a site, search for specific keywords or shop online. Recently it was disclosed that even your offline shopping preferences are shared with such systems, so you get even more supposedly interesting advertising that will condition your choices and likes.

Then there is the timeline of your favorite social network: Facebook or LinkedIn shows you a timeline of content that is trending rather than updates from the accounts you follow, unless you explicitly ask for it. And to say it clearly, it takes some time to find the option to see the latest updates rather than the “suggested timeline”. You just get a timeline of stuff that an algorithm determined should be interesting for you, to condition your likes and your understanding of what is going on. You won’t know that a page you follow has done something important, possibly because it’s not trending.

So, what is “trending”? Trends are the result of a statistical calculation, a highly complex one that needs an adaptive algorithm to be performed in a short time.

Market research used to take weeks in the past: you had to gather information from various sources, collate it and get help from spreadsheets, but in the end the whole thing was the result of human evaluation and processing. Today there’s too much data from too many sources. We call it Big Data and it goes beyond our capacity for calculation.

So, you feed a computer with Big Data and it analyzes the information collected, updating its evaluations based on the data as it comes in.

An adaptive algorithm is like when you are out walking and it starts raining: you want to keep going, so you buy an umbrella. You adapt to the situation.
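To make “updating its evaluations as the data comes in” a bit more concrete, here is a minimal sketch in Python. It is not how any real advertising platform works: the class name `RunningInterest`, the `alpha` weight and the sample numbers are all assumptions I made up for illustration. It keeps a single score and nudges it toward each new observation, so recent behavior counts more than old behavior.

```python
class RunningInterest:
    """Exponentially weighted running average of an interest signal.

    Hypothetical illustration only: `alpha` controls how quickly the
    score adapts to new observations (1.0 = only the latest one counts).
    """

    def __init__(self, alpha=0.5):
        self.alpha = alpha
        self.value = None  # no evaluation until the first observation arrives

    def update(self, observation):
        if self.value is None:
            # First data point: take it as the initial evaluation.
            self.value = observation
        else:
            # Blend the new observation with the previous evaluation.
            self.value = self.alpha * observation + (1 - self.alpha) * self.value
        return self.value


# 0 = ignored an ad about a topic, 1 = clicked on it (made-up data).
interest = RunningInterest(alpha=0.5)
for clicked in [0, 1, 1]:
    score = interest.update(clicked)

print(score)  # 0.75
```

Nothing is recomputed from scratch here: each new click just shifts the previous evaluation, which is what lets this kind of calculation keep up with data arriving in near real time.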

Trends are the result of highly complex statistics run against massive sets of information, so when something is trending and you somehow have an affinity with that trend, you start getting advertising or content based on something that might be relevant to you. It is there to manipulate your feelings, to steer your interest toward something that will keep you swiping through your social network’s timeline and seeing advertisements. And clicking on them. And turning yourself into a product.

We are turning ourselves, by our own will, into products.

If you don’t agree with what I said, take Cambridge Analytica as an example. By using complex and (quite) effective Machine Learning algorithms, the company allegedly manipulated people’s choices in order to influence the results of events like the American presidential election or the Brexit vote.

And the applications of today’s so-called AI get even worse.

Fake News

AI algorithms and implementations have also made their appearance in Fake News and media manipulation. Take Deep Fakes for example: in my opinion, that is one of the clearest examples of how things are going in the wrong direction. Take this video on YouTube from 2017:

https://www.youtube.com/watch?v=AmUC4m6w1wo

Deep Fake software processes a video stream and replaces it with an altered version in near real time, allowing you to create a fake video that looks just as real as the original one. Everything you may have seen in SciFi movies where mass media channels spread fake videos becomes more real than ever. How will you tell the truth from the fake?

AI algorithms have been applied to analyze published information and determine whether it is true or false, in order to mitigate the problem of Fake News.

But how would that analysis be performed? By online research. And this, again, brings me to the point: we are still talking about a sophisticated statistical system.

What if a piece of Fake News is trending?

Back to social media with another example: I am quite involved in topics related to computers and Blockchain technology, so I am not too surprised when content about those topics pops up in my timeline. But when it’s a scam and it passes as sponsored content, a bell rings in my head.

I have repeatedly reported posts claiming that Elon Musk has invested millions of dollars in startups that will disrupt the way we live. Again, I am talking about sponsored content, so someone has paid the social network to spread that scam as widely as possible. If you go to the site to read the content, you find a well-written article with little or no real meaning that invites you to invest, purchase some service, or participate in the alleged ICO (Initial Coin Offering).

So the AI system determined that the content was not Fake News and allowed its publication. Apparently it did not violate any of the social network’s advertising guidelines either. Where is the “I” in that? An “Intelligent” system might have crawled the website, checked its registration date, and searched for reliable sources to determine whether the information was true. Instead it spread the information to as many people as possible in order to increase its reach and engagement. An engagement that, again, turned your visit into money by showing you more ads (tailored to you) and possibly convinced you to invest in that activity yourself.
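Just to show that the checks I have in mind are not rocket science, here is a rough sketch in Python. Everything in it is hypothetical: the `SponsoredPost` fields, the `needs_human_review` function and the thresholds (180 days, 2 sources) are assumptions of mine, not the rules of any real platform, and I am assuming the domain age and the number of independent sources have already been collected somehow.

```python
from dataclasses import dataclass


@dataclass
class SponsoredPost:
    """Hypothetical facts gathered about the site a sponsored post links to."""
    domain_age_days: int        # days since the domain was registered
    independent_sources: int    # reliable sources confirming the claim
    asks_for_investment: bool   # does the landing page ask for money?


def needs_human_review(post, min_domain_age_days=180, min_sources=2):
    """Hold back ads that ask for money and look too new or uncorroborated.

    The thresholds are illustrative assumptions, not tuned values.
    """
    too_new = post.domain_age_days < min_domain_age_days
    uncorroborated = post.independent_sources < min_sources
    return post.asks_for_investment and (too_new or uncorroborated)


# A two-week-old site, no confirmation anywhere, asking you to join an ICO.
scam_like = SponsoredPost(domain_age_days=14, independent_sources=0,
                          asks_for_investment=True)
print(needs_human_review(scam_like))  # True
```

A rule like this does not decide what is true. It only decides when a human should look before the content is pushed to thousands of timelines.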

A little digression: did you see a banner or advertisement on en3py.net?

Computer Vision

Computer Vision is the part of computer science that allows a program to see and to act based on what it sees. CV and Machine Learning combined allow different applications, such as picture identification and captioning, or content moderation. Social media giants use CV and ML together to automate the moderation of images and videos uploaded to their sites. Given the amount of content uploaded daily, human supervision is impossible, so “AI” (without the “I”) comes in handy to perform the duty.

Take a look at what is going on every second on Social Media

One application of CV and ML is captioning a picture, so that its content can be looked up textually with a search engine. There are services available on Amazon AWS or Microsoft Azure that allow you to automatically caption your images using a simple API, so if you take a picture of yourself, the system will tell you it’s “a man wearing a black t-shirt and holding a guitar”. But it won’t be able to determine whether you are sad or singing a sad song, and it won’t be able to determine whether it’s a real man, a 1:1 scale print of a man or a poster. Perhaps it’s still too early for that.

Computer Vision and its alleged capability to determine the content of an image have recently been considered in the EU’s Copyright reform. Article 13 mentions technologies that can intercept and prohibit the upload of “copyright infringing material”. While this is a bit off topic for this post of mine (you can find more on Rights Chain’s blog about the matter), the interesting part is that someone really believes computers have reached such a level of “intelligence” and are capable of judging the content of an image and acting as a jury for its fate. Long story short: they are not. No such technology exists as of today, and attempts to apply it to copyright give results that range from comic to tragic.

Another application of CV has been checking for “erotic” content or nudity. NSFW content is a great concern for globally accessible platforms, so why not have an “Artificial Intelligence” system that classifies the content and handles the dirty job? Of course, here as well the results can be silly, as in the case of Facebook censoring king cake babies for nudity (and how wrong is that).

https://www.nola.com/eat-drink/2019/01/facebook-censors-king-cake-babies-for-nudity-no-kidding.html

Information Security

So here we are again in my habitat: Information Technology. Artificial Intelligence is being applied everywhere to secure systems. Open any magazine or visit any online service provider and try to find someone who is not using AI to power up something. Antivirus software, firewalls, systems that “listen to your network and promptly act to protect you”. After Blockchain, AI might become the next hype. Everything is AI, nothing is “I”.

The idea of having “something” that does things for us is appealing, but not knowing how it does them is dangerous. A statistical system… I beg your pardon: an AI-driven correlation system performs massive statistical analysis on data from various sources to determine whether something is normal or not.
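Here is what “normal” means to such a system, as a minimal sketch in Python. It is a plain z-score check, not the method of any specific security product; the function name `is_anomalous`, the threshold of 3 standard deviations and the traffic numbers are assumptions made up for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it lies more than `threshold` standard deviations
    away from the mean of what has been observed so far."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past observations are identical: anything different is odd.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Requests per minute seen on a network (made-up numbers).
quiet_baseline = [10, 12, 11, 9, 10, 11, 12, 10]
noisy_baseline = [10, 500, 480, 12, 510, 495, 11, 505]

print(is_anomalous(quiet_baseline, 500))  # True
print(is_anomalous(noisy_baseline, 500))  # False
```

The same burst of 500 requests is an alarm against the first history and perfectly “normal” against the second one. The system has no opinion of its own: normal is whatever the data it was fed says it is.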

Let me bring this to the extreme before my conclusion.

If the majority of people started killing each other, would that become “normal” and therefore accepted?

Public Security and Policing

AI is starting to be applied to policing and public security. That is a noble goal, but as I already said, it’s a hammer. So far, I’ve seen two different approaches to this matter.

One is highly statistical: data is gathered from the Internet (as if it were the Holy Grail of truth) to determine whether an urban area might be at risk, so that a Police department can deploy forces to that area. But how could such a system be reliable? It relies on historical data for that area, but the socio-political situation is fluid and changes over time. We can see how habits have changed in the past 10 years; a longer span of (statistical) data might even skew the results of the analysis. So, on one side we have an “Artificial Intelligence” driven system. Guess which use of the hammer this is.

On the other side there are solutions, one of which I was lucky enough to see at work, that used a Machine Learning concept before the term became so widespread. The system is highly complex and adaptive statistical analysis software, but the data in it is the fruit of investigation and human intelligence. The system correlates the criminal events of individuals or groups and is capable of associating series of crimes with the people who committed them. The evolution of this system, as it turned out after a few years, was the ability of the software to predict crimes. And just to cool your spirits: no, police forces were not preventing crimes by arresting offenders before they even knew they would commit a crime. But the system could pinpoint, with incredible precision, the possible targets of a crime, so police forces could arrest the offenders as they were committing it.

There is one big difference between the two systems that might not be obvious at first sight.

In an unattended “learning” environment, things could go bad. Fake news, fake posts and fake accounts could start posting content that raises the level of attention on an urban area, and the system could trigger an alert. Without a validation process, such an event could become a decoy. Isn’t that what is going on in your timelines lately?

Also, an unattended learning system might completely lack vital elements like morals and ethics. We can’t allow a system to come to consider killing normal and therefore stop treating it as a threat. Before things get worse, and I am not talking about a singularity, we need to put the “intelligence” into what we are doing. Perhaps we might become less like products on a shelf and get our free will back into our hands. Possibly for a better future.

25/02/2019 00:00:00